As someone who wants news reporting to be more honest and thorough, I think the idea that robots and computer algorithms are generating instant news stories should trouble all of us. We already live in a media culture that seems to salivate over gossip, celebrity and otherwise pointless nonsense. Much of what I’d personally consider newsworthy already goes ignored. And now we are taking the human element further out of the process? That process can only get worse if we keep allowing machines to litter the landscape with repetitive and unverified crap.

This is possible because some kinds of reporting are formulaic. You take a publicly available source, crunch it down to the highlights, and translate it for readers using a few boilerplate connectors. Hopefully, the result is more digestible.

I imagine the computer populating a Venn diagram. In one circle, it adds hard data (earnings, sports stats, earthquake readings); in another, a selection of journalistic clichés. Where the two intersect, an article is born.

The program chooses an article template, strings together sentences, and spices them up with catchphrases: “It was a flawless day at the dish for the Giants.” The tone is colorfully prosaic, but human enough.
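To make that formula concrete, here is a rough sketch of how such a program might work. It is not Narrative Science's actual system, which I have not seen; the `GameStats` fields, the templates, the thresholds and the function names are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class GameStats:
    """Hypothetical box-score record; field names are assumptions."""
    team: str
    opponent: str
    runs: int
    opponent_runs: int
    hits: int


# Hypothetical sentence templates, keyed by the kind of story the data suggests.
TEMPLATES = {
    "blowout": "The {team} routed the {opponent} {runs}-{opponent_runs}, piling up {hits} hits.",
    "win": "The {team} beat the {opponent} {runs}-{opponent_runs} on {hits} hits.",
    "loss": "The {team} fell to the {opponent} {opponent_runs}-{runs} despite {hits} hits.",
}


def choose_template(stats: GameStats) -> str:
    """Pick a story type from the run margin (thresholds are arbitrary)."""
    margin = stats.runs - stats.opponent_runs
    if margin >= 5:
        return "blowout"
    if margin > 0:
        return "win"
    # Baseball games do not end tied, so anything else is treated as a loss.
    return "loss"


def pick_catchphrase(stats: GameStats) -> str:
    """Return a stock cliche when the numbers cross an arbitrary bar, else ''."""
    if stats.runs > stats.opponent_runs and stats.hits >= 10:
        return "It was a flawless day at the dish for the {team}."
    return ""


def generate_story(stats: GameStats) -> str:
    """Fill the chosen template with hard data, then append a cliche if one fits."""
    fields = vars(stats)  # dataclass fields as a plain dict
    sentences = [TEMPLATES[choose_template(stats)].format(**fields)]
    phrase = pick_catchphrase(stats)
    if phrase:
        sentences.append(phrase.format(**fields))
    return " ".join(sentences)


story = generate_story(GameStats("Giants", "Dodgers", 7, 1, 12))
print(story)
```

Notice what is missing: nothing in this sketch checks whether the numbers it was handed are true. It simply dresses up whatever data arrives.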

The founder of Narrative Science, the company creating a lot of the computer-generated news being discussed, predicts that computers could write upwards of 90% of the world’s news by 2030. Major companies and media outlets are already using the technology, and most are using it anonymously.

So as we hope for integrity in journalism, and for less of a monopoly at the top of the media spectrum, this signals a high probability of the opposite. As we hope for more fact-checking and perspectives that can only be genuinely fleshed out by actual humans, we may get even more sensationalistic, exploitative and formulaic rubbish in the coming years.

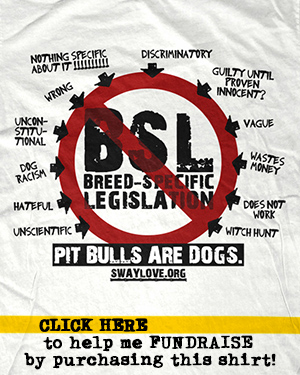

The phrase “Pit Bull” generates news. Careful breed identification and actual scientific evidence rarely play a role in making those assessments. And this is now! Just imagine a software system loaded with catchphrases and templates, whose sole purpose is to quickly generate news, writing an article on an alleged “dog attack.” The thought couldn’t be much worse.